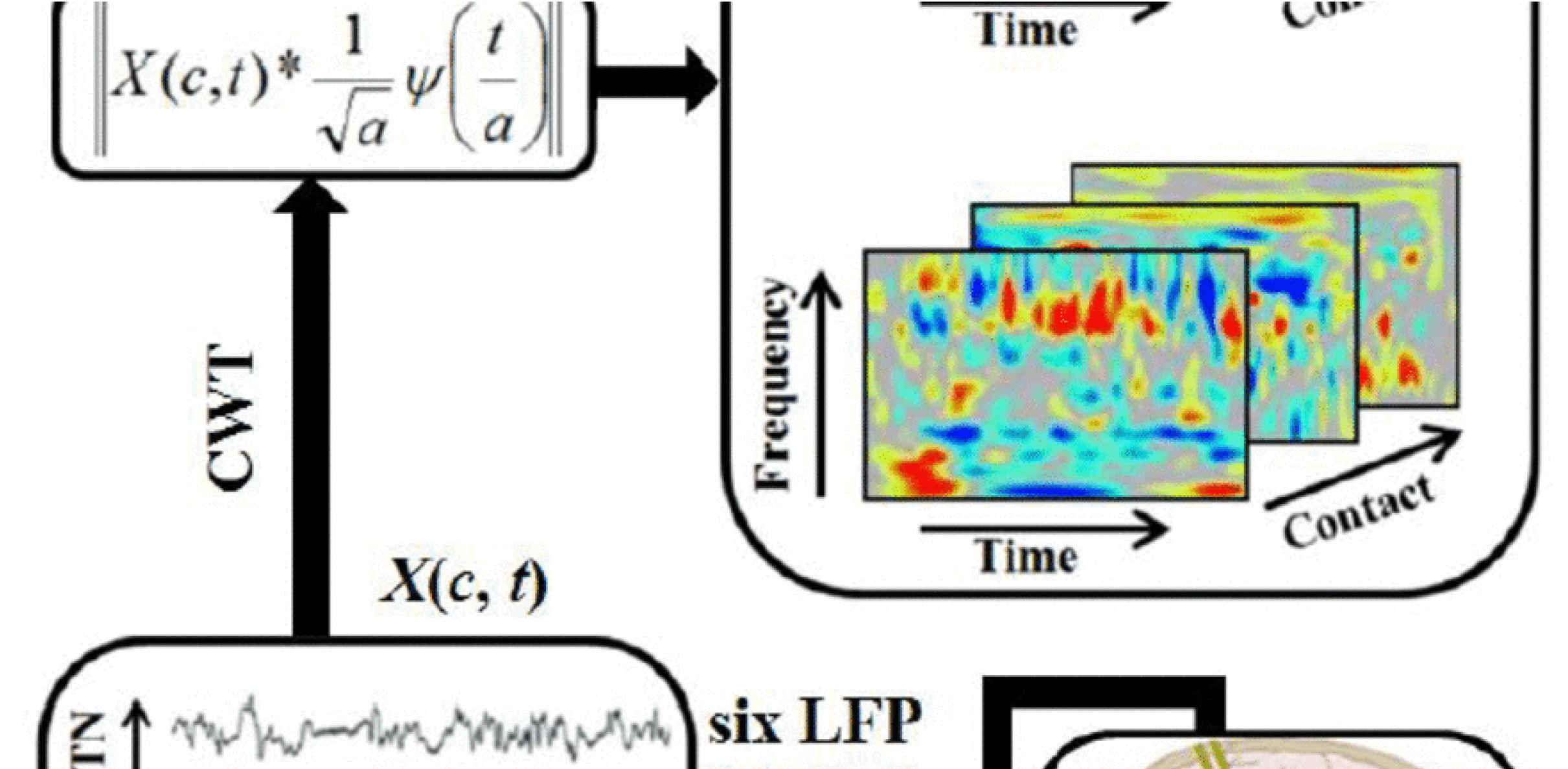

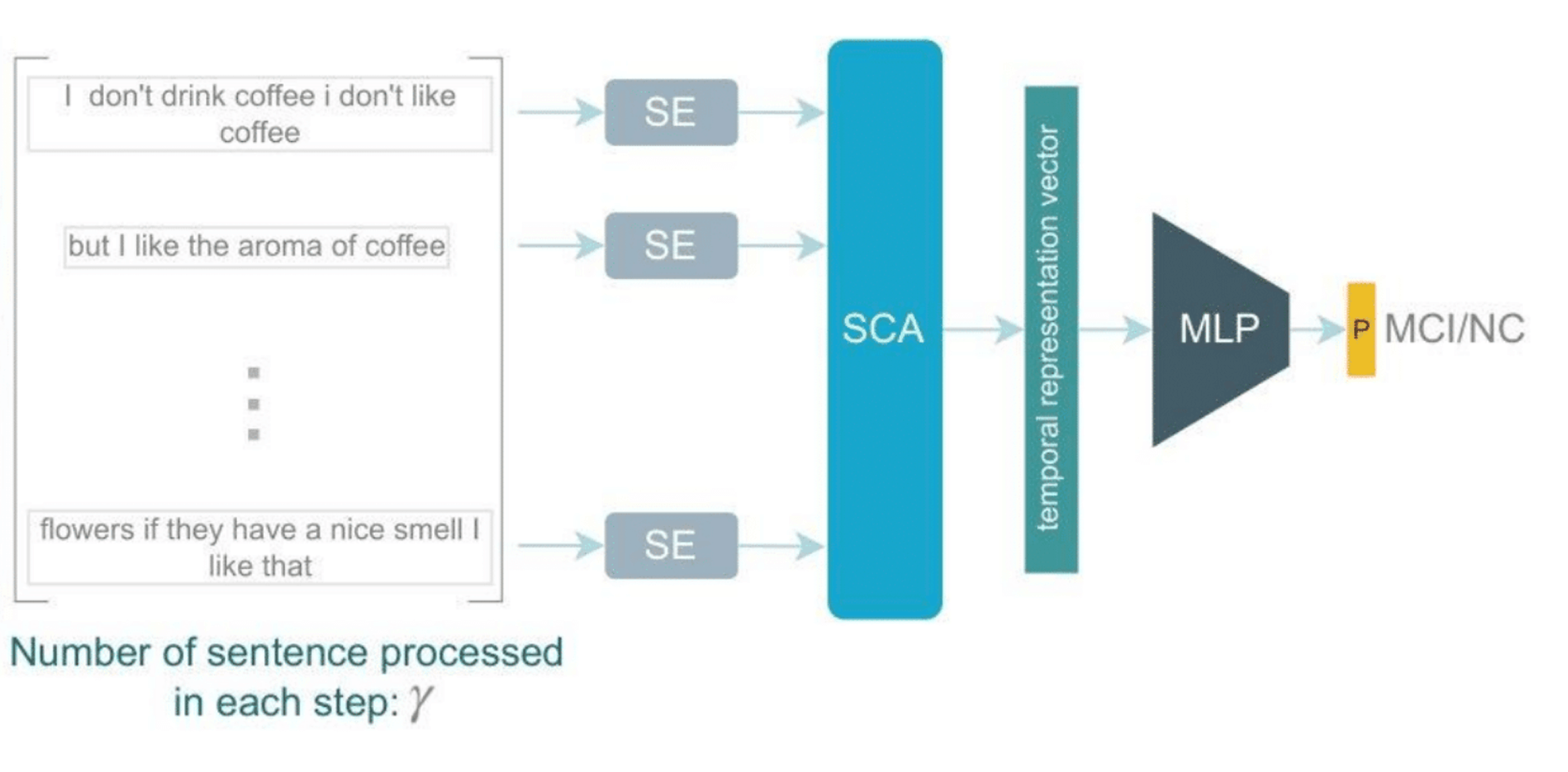

MCID Using Deep Learning Methods and Non-invasive Data

Cognitive Impairment Detection & Deep Learning

To learn more about this approach to detecting Mild Cognitive Impairment (MCI) with deep learning, read the full article published on arXiv.

5 min