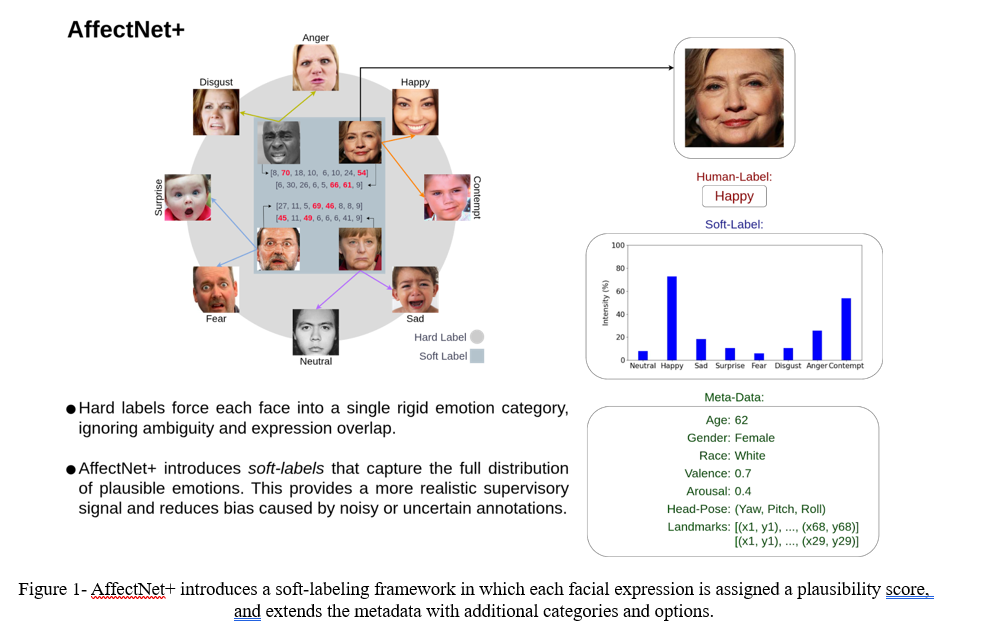

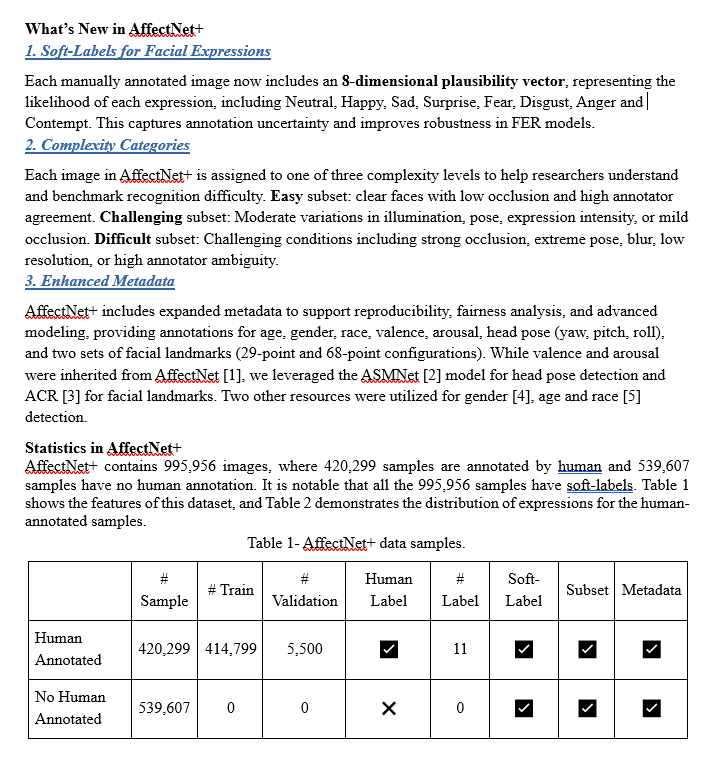

Recent advances in affective computing highlight the growing need for facial-expression datasets that better capture annotation uncertainty, demographic attributes, and real-world distributional biases. While existing databases largely rely on single hard labels and offer limited metadata, AffectNet+ introduces a comprehensive soft-label, metadata-rich extension of the original AffectNet [1]. AffectNet+ reprocesses all ~1 million images in AffectNet and generates a plausible emotion vector for every sample using a high-accuracy ensemble of state-of-the-art expression recognition models. In addition, it provides automatically extracted metadata, including demographic attributes (age, gender, race), face embeddings, and facial landmark structure, enabling deeper research into fairness, robustness, and dataset bias. AffectNet+ preserves the scale of AffectNet while substantially increasing annotation reliability and semantic richness, making it the first large-scale facial-expression repository to offer both soft labels and fine-grained metadata for every image. Multiple evaluation experiments show that models trained on AffectNet+ achieve higher accuracy, better calibration, and reduced demographic bias compared with models trained on the original dataset, establishing AffectNet+ as a new benchmark resource for next-generation facial-expression recognition research. Figure 1 illustrates the idea behind AffectNet+.
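To make the idea of a soft label concrete, here is a minimal Python sketch. It assumes that each ensemble member outputs logits over an eight-category expression space (neutral, happy, sad, surprise, fear, disgust, anger, contempt) and that the soft label is the average of the members' softmax probabilities. The description above does not say how AffectNet+ actually combines its ensemble, so averaging is an illustrative choice. The label order, the logit values, and the helper names `ensemble_soft_label` and `soft_cross_entropy` are all hypothetical. The sketch also shows the soft-target cross-entropy that a model could be trained with on such labels.

```python
import math

# Hypothetical label order; the actual AffectNet+ vector layout may differ.
EMOTIONS = ["neutral", "happy", "sad", "surprise", "fear", "disgust", "anger", "contempt"]


def softmax(logits):
    """Convert raw scores into a probability distribution (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def ensemble_soft_label(per_model_logits):
    """Average the softmax outputs of several models into one soft label.

    Averaging is an assumed combination rule, used here for illustration only.
    """
    probs = [softmax(logits) for logits in per_model_logits]
    n_models = len(probs)
    return [sum(p[k] for p in probs) / n_models for k in range(len(probs[0]))]


def log_softmax(logits):
    """Log-probabilities computed with the log-sum-exp trick."""
    m = max(logits)
    lse = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - lse for x in logits]


def soft_cross_entropy(target, logits):
    """Cross-entropy between a soft target distribution and predicted logits."""
    return -sum(t * lp for t, lp in zip(target, log_softmax(logits)))


if __name__ == "__main__":
    # Made-up logits from three hypothetical ensemble members for one image.
    member_logits = [
        [0.2, 2.5, 0.1, 1.8, 0.3, -0.5, -0.2, 0.0],
        [0.5, 2.0, 0.0, 2.1, 0.4, -0.3, 0.1, -0.1],
        [0.1, 2.8, -0.2, 1.5, 0.2, -0.6, -0.4, 0.2],
    ]
    soft = ensemble_soft_label(member_logits)
    for name, p in zip(EMOTIONS, soft):
        print(f"{name:>9}: {p:.3f}")
    # A single hard label is just the argmax, which discards the
    # happy/surprise ambiguity that the soft label keeps.
    hard = EMOTIONS[max(range(len(soft)), key=soft.__getitem__)]
    print("hard label:", hard)
    # Loss of a hypothetical student model trained against the soft label.
    student_logits = [0.0, 1.5, 0.0, 1.2, 0.0, 0.0, 0.0, 0.0]
    print("soft-label loss:", round(soft_cross_entropy(soft, student_logits), 4))
```

Training against the full distribution rather than its argmax lets the loss reward a model for reproducing genuine ambiguity, such as a face that sits between happiness and surprise.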

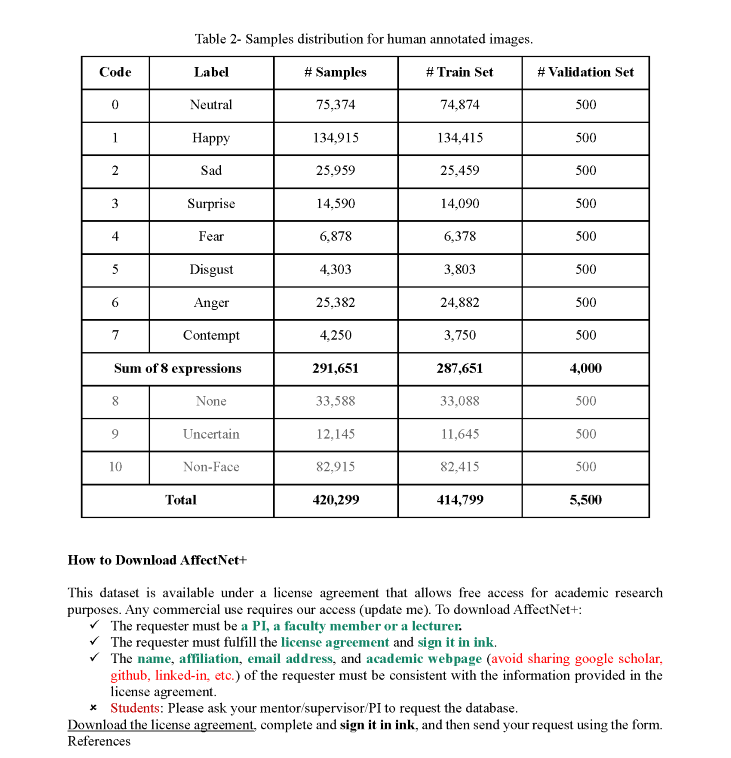

Clarification: The AffectNet+ database is released for academic research purposes only. All papers or other publicly available text that use images from the database, whether composite or partial, must cite the paper listed above.

More information:

Only PIs, lab managers, or professors can request AffectNet+. To request it, download, complete, and sign the LICENSE AGREEMENT FILE. Once the agreement is completed, use the following form to submit your request, and make sure to attach the signed agreement file to the form.

Submit A Request